With maintenance of automated test cases taking up a good portion of our clients' time, we thought it would be a good idea to lay out some best practices for building rock-solid automation that is easy to understand at a glance and can be maintained with minimal effort. This section is a continuation of this post, in which we began discussing the initial build-out phase of a proper test automation implementation. For links to all articles in the series, please use this link: Best Practices for Achieving Automated Regression Testing Within the Enterprise

2.6 Parametrize Your Scripts (Data Driven Testing)

Using variables in your test cases can dramatically expand your test coverage in certain areas. Take, for example, testing a simple login page. You might want to test the system against a number of different login types, account details, or post-login privileges. With a good data set and parametrized test cases, you can test all of these combinations from a single test script and its associated data table.

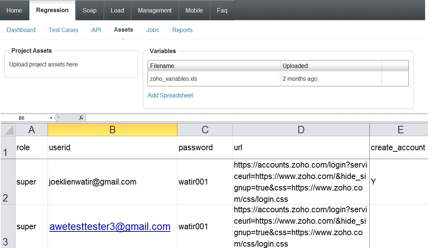

Awetest fully supports data-driven testing: testers can upload data tables to an ‘Assets Library’, from which the data set can be pulled into a script, greatly expanding the possibilities for your automation coverage.
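To make the idea concrete, here is a minimal sketch in plain Ruby (not Awetest's data-table API) of one login check driven by a small data table. The rows, `attempt_login`, and `VALID_ACCOUNTS` are hypothetical stand-ins for real test data and the application under test; in a real script each row would drive the browser instead.

```ruby
# Hypothetical data table: one row per login scenario. In a real project the
# rows would come from an uploaded spreadsheet or CSV, not be hard-coded.
LOGIN_DATA = [
  { username: "admin",  password: "admin123",  expected: :success },
  { username: "viewer", password: "view456",   expected: :success },
  { username: "admin",  password: "wrongpass", expected: :failure },
  { username: "",       password: "admin123",  expected: :failure }
].freeze

# Hypothetical stand-in for the application under test.
VALID_ACCOUNTS = { "admin" => "admin123", "viewer" => "view456" }.freeze

def attempt_login(username, password)
  VALID_ACCOUNTS[username] == password ? :success : :failure
end

# One parametrized test: the same steps run once for every row of the table.
def run_login_tests(rows)
  rows.map do |row|
    actual = attempt_login(row[:username], row[:password])
    row.merge(actual: actual, passed: actual == row[:expected])
  end
end

results = run_login_tests(LOGIN_DATA)
results.each do |r|
  puts "#{r[:username].inspect} / #{r[:password]}: #{r[:passed] ? 'PASS' : 'FAIL'}"
end
```

Covering a new scenario then means adding a row to the table, not writing another script.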

2.7 Keep the Tests Short

Create Small Tests – Nothing slows down a regression cycle more than long, jam-packed tests. If you can cover the same functionality with a set of small tests, that is almost always better than one large test: results come back sooner, and a failed test can be rerun quickly without repeating everything else.

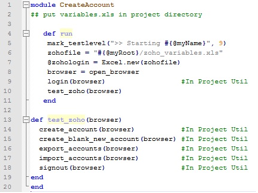

***Remember that test cases and methods can be stored in the project’s utility file and then called with a single line in the script.
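As a rough illustration of the utility-file idea, the sketch below keeps a shared login step in one module so each short test can reuse it in a single line. `FakeApp`, `LoginUtils`, and `log_in_as` are hypothetical names chosen for this example; they are not part of the Awetest API.

```ruby
# Hypothetical stand-in for the application under test.
class FakeApp
  attr_reader :current_user

  def initialize
    @users = { "alice" => "secret" }
    @current_user = nil
  end

  # Returns true and records the user only when the credentials match.
  def log_in(user, password)
    ok = @users[user] == password
    @current_user = user if ok
    ok
  end
end

# Shared steps that would live in the project's utility file.
module LoginUtils
  # Log in or fail loudly, so a test can reuse this step in one line.
  def log_in_as(app, user, password)
    raise "login failed for #{user}" unless app.log_in(user, password)
    app
  end
end

include LoginUtils

# Two short, focused tests, each reusing the shared login step in one line.
def test_login_sets_current_user
  app = log_in_as(FakeApp.new, "alice", "secret")
  app.current_user == "alice"
end

def test_bad_password_is_rejected
  log_in_as(FakeApp.new, "alice", "wrong")
  false
rescue RuntimeError
  true
end

puts test_login_sets_current_user  # prints true
puts test_bad_password_is_rejected # prints true
```

If the login flow changes, only `log_in_as` needs updating, not every test that logs in.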

Sometimes it is not feasible to keep tests very short. For example, if each script requires an extensive login and validation process and the actual test case makes up less than half of the script, it makes sense to combine multiple test cases into one script. If you do combine test cases, be sure to use the marktest level command/function (see the Add Traceability section for more on marktest level) to break up your reports by test case and improve traceability.
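The sketch below illustrates the concept in plain Ruby: the expensive setup runs once, and a marker before each test case lets the report show a pass or fail per case instead of a single result for the whole script. The `Report` class and its `mark_test` method are hypothetical illustrations of the idea, not Awetest's marktest level API; check the Awetest API page for the real call.

```ruby
# Hypothetical reporter that groups step results under test-case markers.
class Report
  def initialize
    @cases = {}   # test case name => array of [step description, passed?]
    @current = nil
  end

  # Start a new test-case section in the report.
  def mark_test(name)
    @current = name
    @cases[name] = []
  end

  # Record one step under the current test case.
  def step(description, passed)
    raise "call mark_test before recording steps" if @current.nil?
    @cases[@current] << [description, passed]
  end

  # A test case passes only if every step recorded under it passed.
  def summary
    @cases.transform_values { |steps| steps.all? { |_, ok| ok } }
  end
end

report = Report.new

# Expensive shared setup runs once for the whole combined script.
logged_in = true # stand-in for a long login + validation sequence

report.mark_test("TC-01 View profile")
report.step("profile page loads", logged_in)

report.mark_test("TC-02 Update email")
report.step("email field accepts input", true)
report.step("confirmation shown", false) # simulated failure

report.summary.each { |name, ok| puts "#{name}: #{ok ? 'PASS' : 'FAIL'}" }
```

Because the failure is attributed to TC-02 only, TC-01 still reports as passing even though both share one script.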

2.8 Use Strong Element Locators

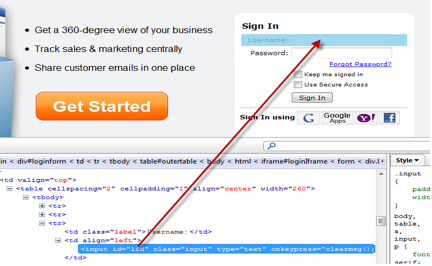

Use Strong Element Locators – Most elements in a modern web application can be located by the browser in several ways, but not all locators are created equal: some give you a far more reliable handle on an element than others. For example, you can access elements using screen coordinates, but those can change with the screen resolution of the machine running the test, which makes coordinates much less dependable than, say, an ID or class. ID, Name, Class, Link, Text, and Partial Text are the strongest locators to use in your scripts, but you can also use Index, XPath, Child Elements, CSS Properties, JS Events, DOM Elements, KeyStrokes, and Page Coordinates.

The screenshot above shows how to find element locators using a browser with developer tools enabled. In this example the ID of the ‘Username’ text field is ‘lid’, so we can get a handle on the element with something like `set_textfield_by_id(browser, "lid", "hello world")`.
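One way to apply this ranking consistently is to pick the strongest locator an element actually exposes. The sketch below is a hypothetical helper, not an Awetest or Watir function. The six strong strategies follow the list above; the relative order of the weaker fallbacks and the sample XPath and coordinate values are assumptions made for illustration.

```ruby
STRONG_LOCATORS = %i[id name class link text partial_text].freeze
# Assumption: the relative order of the weaker fallbacks is illustrative only.
WEAK_LOCATORS = %i[css xpath index coordinates].freeze
PRIORITY = (STRONG_LOCATORS + WEAK_LOCATORS).freeze

# Given the attributes an element exposes, return the strongest available
# locator as [strategy, value], or nil if no known strategy applies.
def best_locator(attributes)
  PRIORITY.each do |strategy|
    value = attributes[strategy]
    return [strategy, value] unless value.nil? || value.to_s.strip.empty?
  end
  nil
end

# Attributes for the 'Username' field; the xpath and coordinates are made up.
username_field = { id: "lid", xpath: "//form/div[1]/input", coordinates: [412, 230] }
p best_locator(username_field) # prints [:id, "lid"]

# An element with no id or name falls back to the next strongest option.
p best_locator({ class: "btn-primary", index: 3 }) # prints [:class, "btn-primary"]
```

Centralizing the ranking in one place means a script never silently falls back to coordinates while an ID is available.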

2.9 Build “Patient” Scripts

Wait for the Right Thing – When using a wait-until method to pause your script until an element appears, it is always better to take the positive-confirmation approach: wait for a success message to appear rather than an error message. If you wait for an error and the action actually succeeds, the error never appears and your test sits idle for the full timeout before continuing. Awetest comes packaged with a number of methods to help users wait for the right thing; they are documented on the Awetest API page under the Legacy and Legacy Extensions utility files.

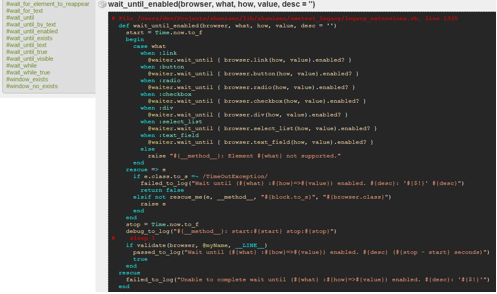

Wait methods included in the Awetest DSL, such as wait_until_enabled and wait_until_text
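Awetest ships its own wait methods, so the following is only a language-level sketch of the principle, not their implementation. `poll_until` is a hypothetical generic polling helper, and `FakePage` simulates a successful save whose confirmation appears after 0.2 seconds. Waiting on the success message returns almost immediately; waiting on an error that never comes sits idle for the entire timeout.

```ruby
# Monotonic clock, so timing is unaffected by system clock changes.
def now
  Process.clock_gettime(Process::CLOCK_MONOTONIC)
end

# Hypothetical polling helper: re-checks the block until it returns a truthy
# value or the timeout expires. Returns true on success, false on timeout.
def poll_until(timeout: 1.0, interval: 0.05)
  deadline = now + timeout
  loop do
    return true if yield
    return false if now >= deadline
    sleep interval
  end
end

# Hypothetical page: the save succeeds, so "Account saved" appears after
# 0.2 s and an error message never appears at all.
class FakePage
  def initialize
    @loaded_at = now
  end

  def text
    now - @loaded_at >= 0.2 ? "Account saved" : "Saving..."
  end
end

# Returns [block result, elapsed seconds].
def timed
  start = now
  result = yield
  [result, now - start]
end

page = FakePage.new
# Positive confirmation: returns as soon as the success message shows up.
saw_success, success_secs = timed { poll_until { page.text.include?("Account saved") } }
# Negative approach: an error that never comes burns the entire timeout.
saw_error, error_secs = timed { poll_until { page.text.include?("Error") } }

puts "success seen: #{saw_success} after #{success_secs.round(2)} s"
puts "error seen: #{saw_error} after #{error_secs.round(2)} s"
```

Across a full regression run, every wait on the wrong signal adds a full timeout, which is why the positive check matters.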

2.10 Reduce/Eliminate Data Dependencies

Here test automation can borrow from the military motto of leaving no one behind: think of a good test case as one that leaves no data behind (unless that data is needed later). Since testing software invariably creates new data, a good test case pairs that data creation with a deletion step within the same test case. This means that if several scripts in your daily end-to-end regression run test your application's create-account functionality with the same method, you do not have to worry about duplicated, overwritten, or stockpiled data. Because each test case does its best to clear out its data before the next case starts, data dependencies are reduced or eliminated.
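The "leave no data behind" pattern can be sketched in plain Ruby with an `ensure` clause, so cleanup runs even when a check fails. `AccountStore` and the email address are hypothetical stand-ins; in a real script the create and delete steps would drive the UI or an API. The `created` flag keeps the test from deleting an account it did not create itself.

```ruby
# Hypothetical in-memory stand-in for the application's account store.
class AccountStore
  def initialize
    @accounts = {}
  end

  def create(email)
    raise ArgumentError, "duplicate account: #{email}" if @accounts.key?(email)
    @accounts[email] = { email: email }
  end

  def delete(email)
    @accounts.delete(email)
  end

  def exists?(email)
    @accounts.key?(email)
  end

  def count
    @accounts.size
  end
end

# A self-cleaning test: whatever it creates, it deletes, even if a check fails.
def test_create_account(store, email)
  created = false
  store.create(email)
  created = true
  raise "account was not created" unless store.exists?(email)
  true
ensure
  # Runs whether the test passed or raised; only removes data it created.
  store.delete(email) if created
end

store = AccountStore.new
# Repeated runs reuse the same email without a duplicate-account error,
# because each run removes the account it created.
3.times { test_create_account(store, "qa.user@example.com") }
puts "accounts left behind: #{store.count}" # prints 0
```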

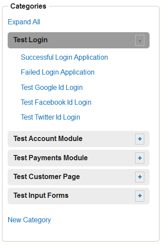

In the Category/Test Case list above, make sure the “Failed Login Application” test case does not depend on the “Successful Login Application” script (that is, it should not need any data or conditions set up by that script), for two main reasons:

1. Cascading failures – an issue in the first script will cause cascading, misleading failures (false positives) in any script that depends on it.

2. Ability to run discrete scripts – you want to be able to run scripts selectively, on their own or in any subset, without first running the scripts they would otherwise depend on.
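One way to get that independence, sketched in plain Ruby: each test sets up the account it needs and removes it afterwards, so the suite can run in shuffled order or one script at a time. `UserStore`, `with_account`, `run_tests`, and the account values are hypothetical; only the two test names come from the list above.

```ruby
# Hypothetical in-memory user store standing in for the application.
class UserStore
  def initialize
    @users = {}
  end

  def add(user, password)
    @users[user] = password
  end

  def remove(user)
    @users.delete(user)
  end

  def login(user, password)
    return :no_such_user unless @users.key?(user)
    @users[user] == password ? :ok : :bad_password
  end
end

# Each test creates the account it needs and removes it afterwards,
# instead of relying on another script to have set things up.
def with_account(store, user, password)
  store.add(user, password)
  yield
ensure
  store.remove(user)
end

TESTS = {
  "Successful Login Application" => lambda { |store|
    with_account(store, "pat", "pw1") { store.login("pat", "pw1") == :ok }
  },
  "Failed Login Application" => lambda { |store|
    with_account(store, "pat", "pw1") { store.login("pat", "wrong") == :bad_password }
  }
}.freeze

# Run all tests, a single test, or any subset, in any order.
def run_tests(names, store = UserStore.new)
  names.to_h { |name| [name, TESTS.fetch(name).call(store)] }
end

all_results = run_tests(TESTS.keys.shuffle)
single = run_tests(["Failed Login Application"])
p all_results
p single
```

Note that "Failed Login Application" needs an existing account to produce a bad-password result, and it creates that account itself rather than inheriting one from the successful-login script.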