Visit this post for an index for our Blog Series – Best Practices for Achieving Automated Regression Testing Within the Enterprise

Build: Building Automated Test Cases to Get the Job Done

With maintenance of automated test cases taking up a good portion of our clients' time, we thought it would be a good idea to lay out some best practices for building rock-solid automation that is both easy to understand at a glance and easy to maintain with minimal effort. This section continues this post, in which we discussed the build-out phase of a proper test automation implementation. For links to all articles in the series, please use this link: Best Practices for Achieving Automated Regression Testing Within the Enterprise

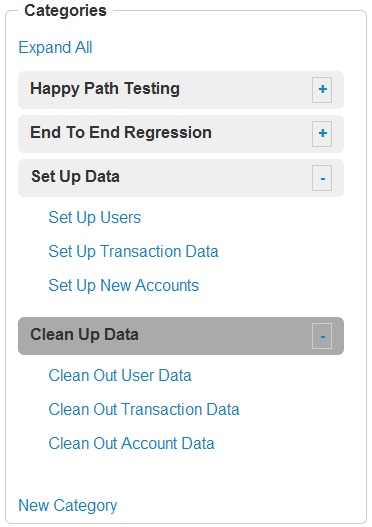

2.11 Revert back to original Data Conditions (wherever possible)

Data consistency is the bane of every tester’s existence. Say you kick off a job and it dies halfway through setting up its data, well before the script could reset the data it created. That’s why Awetest is set up to deliver “Smart” Cleanup Scripts built to reset data and ensure maximum consistency.
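To make the idea concrete, here is a minimal sketch in Python of one way a cleanup script can stay reliable even when a job dies mid-setup. It is not Awetest's implementation. The names `CleanupRegistry`, `run_with_cleanup`, `create_account`, and the in-memory `database` are all hypothetical, made up for illustration. The point is that every piece of created data registers its own undo step at creation time, so whatever was created before the failure still gets reset.

```python
class CleanupRegistry:
    """Hypothetical helper: records an undo action for every piece of data a script creates."""

    def __init__(self):
        self._undo_actions = []

    def register(self, description, undo):
        # Register the undo step at the moment the data is created.
        self._undo_actions.append((description, undo))

    def run(self):
        """Undo everything in reverse creation order; keep going if one undo fails."""
        failures = []
        while self._undo_actions:
            description, undo = self._undo_actions.pop()
            try:
                undo()
            except Exception as exc:
                failures.append((description, exc))
        return failures


def run_with_cleanup(test_body):
    """Run a test body and always reset the data it created, even if it dies midway."""
    registry = CleanupRegistry()
    try:
        test_body(registry)
    finally:
        registry.run()


# Hypothetical stand-in for the application's data store.
database = {}


def create_account(registry, name):
    database[name] = {"active": True}
    registry.register(f"delete account {name}", lambda: database.pop(name, None))


def flaky_setup(registry):
    create_account(registry, "alice")
    create_account(registry, "bob")
    raise RuntimeError("job died halfway through setup")
```

Calling `run_with_cleanup(flaky_setup)` still raises the `RuntimeError`, but both accounts are removed before the error propagates, so the next run starts from the original data conditions.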

2.12 Build App-Specific Library

Sometimes custom functions are needed to properly exercise a given test case. The Awetest framework is fully extensible: you can write your own custom test methods using raw Watir, Selenium, or our native Awetest language, and then apply your customizations through an external application utility file managed in the ‘Assets Tab’ of the Awetest Regression Module.

Here is an example of the login method stored in this project's utility file.

Make custom code reusable. Reusable custom functions save time later, so regularly move custom methods into a project utility file where they can be called in one line from your test scripts. Over time this builds a robust custom library for the project that makes future scripting much faster and easier. Awetest lets you manage project utility files from the ‘Assets Tab’ of a given project.
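As a rough illustration of the pattern, the Python sketch below shows a login helper that lives in a utility module and is called in one line from a test script. It does not reproduce the Awetest, Watir, or Selenium APIs. The `FakeBrowser` class and its `fill`, `click`, and `text_present` methods are hypothetical stand-ins so the example runs on its own, and the field names and "Welcome" text are assumptions.

```python
class FakeBrowser:
    """Hypothetical stand-in for a real browser driver so the sketch is self-contained."""

    def __init__(self, valid_users):
        self.valid_users = valid_users  # maps username -> password
        self.fields = {}
        self.page_text = "Please sign in"

    def fill(self, field, value):
        self.fields[field] = value

    def click(self, button):
        if button == "login":
            user = self.fields.get("username")
            ok = (
                user in self.valid_users
                and self.valid_users[user] == self.fields.get("password")
            )
            self.page_text = f"Welcome, {user}" if ok else "Invalid credentials"

    def text_present(self, text):
        return text in self.page_text


# --- contents of a hypothetical project utility file ---
def login(browser, username, password):
    """Fill in and submit the login form; return True if the welcome text appears."""
    browser.fill("username", username)
    browser.fill("password", password)
    browser.click("login")
    return browser.text_present("Welcome")


# --- a test script then needs only one line per reusable step ---
def test_successful_login():
    browser = FakeBrowser({"qa_user": "secret"})
    assert login(browser, "qa_user", "secret")
```

Because the form-handling details live in one place, a change to the login page means updating `login` once rather than editing every script that signs in.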

2.13 Test & Deploy

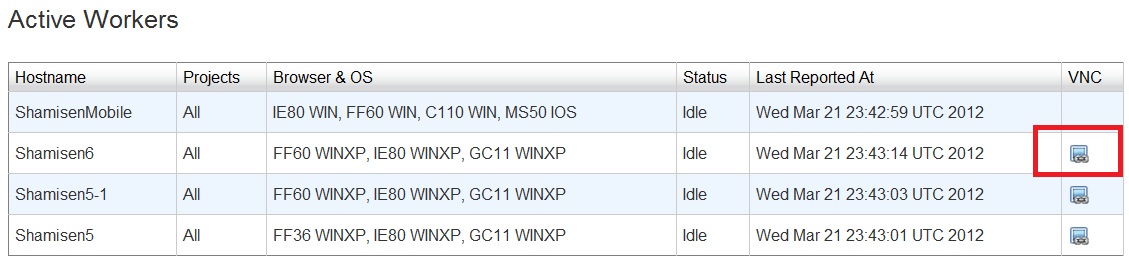

Building scripts that seem to work is easy; building scripts that do work can be hard. Check the logs and watch the run yourself while tests are executing. Awetest lets you watch your tests run through a VNC viewer, which helps catch on-screen errors that are hard for a computer to recognize.

Figure 2: Clicking the VNC Link will show you the desktop of the agent (Shamisen) Machine

Results can be deceiving if all you are doing is looking at the logs. Awetest logs are different. They include extras that cut down on the tedious task of digging through debug messages to get to the true error.

These include features such as hiding or showing passed or failed steps, and screen captures taken before, during, and after an error is recorded.

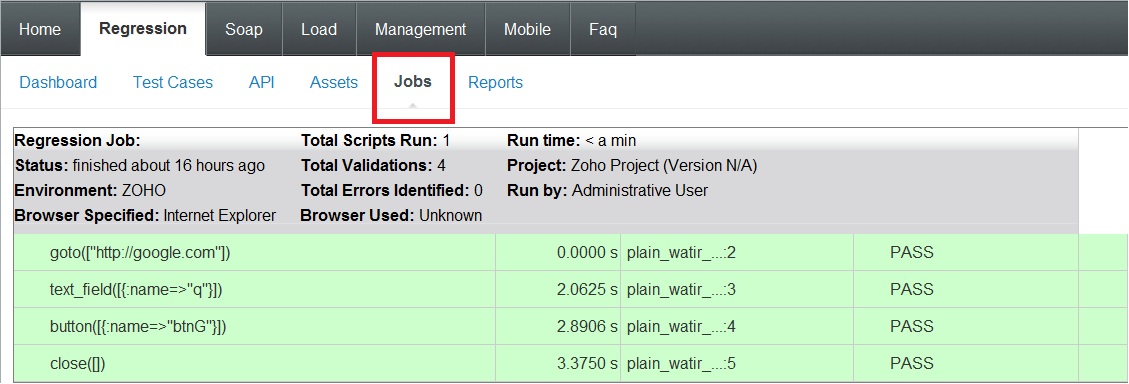

With Awetest you can also watch the log update in real time from the jobs page. Simply navigate to the jobs page, click the link for the job you just started, and watch as the log is populated. This is great for first runs of scripts, when you know something is bound to go wrong.

2.14 Keep it Simple!

When building test cases, it is critical to keep things as simple as possible. Overly complicated test cases that cover wide variations of the application take much longer to execute and are much harder to maintain. Keep test cases as small as possible, with a maximum of ten unit tests per test case.

To make things even simpler, Awetest allows you to easily refactor encapsulated unit tests (e.g. Successful Login, Create Account, Delete Account) into a project utility file, where each can be referenced in a single line in the test case. This means building a new test case can be as simple as three or four lines of code. And you can do this across the board, not only with Awetest scripts. In the below example we are leveraging a Sikuli script and a variable spreadsheet stored in the project's Assets library. The highlighted line of script indicates the single line that executes the entire Sikuli portion of the test case.

2.15 Clean the reports

If you notice that a run has failed prematurely and you want to rerun the job immediately, you can do so directly from the jobs page. There is a restart button in the same row as the job, next to the delete button.

Clicking the restart button will clean out the reports logged for that job and then restart it.

This functionality is important: it keeps garbage data out of the reports and keeps the error-rate overview analytics accurate. (More on this in our upcoming best-practices post on reporting.)
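The effect on the analytics is easy to see with a small Python sketch. This is an illustration only, not how Awetest stores or computes its reports. The `Job` class, its `restart` method, and the `error_rate` function are hypothetical, and the pass/fail values are made-up sample data.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    """Hypothetical job record; each report entry is True (pass) or False (fail)."""
    name: str
    reports: list = field(default_factory=list)

    def restart(self, run):
        # Discard results from the aborted run before recording the new ones.
        self.reports.clear()
        self.reports.extend(run())


def error_rate(reports):
    """Fraction of recorded steps that failed (0.0 when nothing was recorded)."""
    if not reports:
        return 0.0
    return reports.count(False) / len(reports)


# Sample data: a run that died early, followed by a proper rerun.
aborted_results = [True, False, False]
rerun_results = [True, True, True, False]

job = Job("nightly regression", list(aborted_results))
job.restart(lambda: rerun_results)

clean_rate = error_rate(job.reports)                          # 1 failure out of 4 steps
polluted_rate = error_rate(aborted_results + rerun_results)   # 3 failures out of 7 steps
```

With the aborted run cleared, the rate reflects only the real rerun (1 failure in 4 steps). If the stale results were kept, the same job would report 3 failures in 7 steps, which overstates how unhealthy the application is.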