Visit this post for an index for our Blog Series: Best Practices for Achieving Automated Regression Testing Within the Enterprise

Manage: Tips for Better Management and Maintenance of Test Cases

With maintenance of automated test cases taking up a good portion of our clients' time, we thought it would be a good idea to lay out some best practices for managing regression-based automation with minimal effort and maximum effectiveness. This section continues this post, in which we began to discuss the initial build-out phase of a proper test automation implementation. For links to all articles in the series, please use this link: Best Practices for Achieving Automated Regression Testing Within the Enterprise

3.1 Refactor

The definition of insanity is doing the same thing over and over again and expecting different results. At 3qilabs we don’t see you as insane; we just see you as a good regression tester. In fact, we consider reusing unit tests to build out different test case scenarios a best practice of regression automation. Awetest itself includes an ‘Assets library’ where unit tests can be saved in a project utility file and then called in one line in your test script. This means that the login method you use for every single test case doesn’t need to take up ten lines in your code, just one simple line. This ability to quickly and easily reuse unit tests across different test cases makes Awetest a powerful platform for building and maintaining a proper regression suite for your application.
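To illustrate the idea in general terms, here is a minimal Python sketch of a shared utility step that several test cases call in one line. Everything in it is hypothetical: `FakeBrowser`, `login`, the URL, and the two case functions are stand-ins for whatever your project utility file provides, and none of it is Awetest's actual API.

```python
class FakeBrowser:
    """Hypothetical stand-in for a real browser driver; it only records actions."""

    def __init__(self):
        self.actions = []

    def goto(self, url):
        self.actions.append(("goto", url))

    def fill(self, field, value):
        self.actions.append(("fill", field, value))

    def click(self, element):
        self.actions.append(("click", element))


def login(browser, user="demo_user", password="demo_pass"):
    """Shared utility step: the multi-line login flow is written once, here."""
    browser.goto("https://example.test/login")  # placeholder URL
    browser.fill("username", user)
    browser.fill("password", password)
    browser.click("sign_in")
    return browser


def add_account_case(browser):
    login(browser)  # one line instead of repeating the whole login flow
    browser.click("add_account")
    return browser.actions


def delete_account_case(browser):
    login(browser)  # the same helper, reused in a different scenario
    browser.click("delete_account")
    return browser.actions
```

If the login page changes, only `login` needs to be updated, and every test case that calls it picks up the fix.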

3.2 Run Often

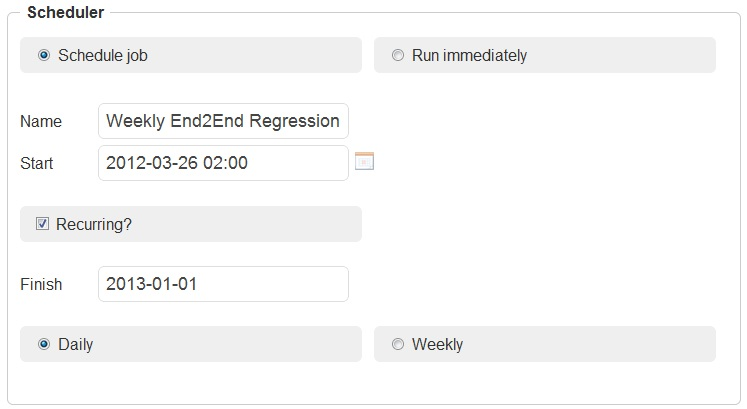

Keep a regular regression schedule that is both tied to and independent of release cycles. You should always run some sort of regression test after a new release has been moved up the development chain, but you should also run regression tests on a predefined schedule to increase coverage and spot issues that might come to light in the middle of a development cycle. Awetest’s scheduler functionality allows you to easily set up daily or weekly regression runs.
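The two triggers described above (a new release, or a fixed interval elapsing) can be sketched as a single check. This is only an illustration in Python; the function name `regression_due` and its parameters are assumptions for the example and do not reflect how Awetest's scheduler is implemented.

```python
from datetime import datetime, timedelta


def regression_due(last_run, now, interval=timedelta(days=1), new_release=False):
    """Return True if a regression run should start now.

    A run is triggered either by a new release (release-driven) or by the
    interval having elapsed since the last run (schedule-driven).
    """
    if new_release:
        return True
    return now - last_run >= interval


# Example: a daily schedule, last run at 02:00 on 1 January 2024.
last = datetime(2024, 1, 1, 2, 0)
mid_cycle = last + timedelta(hours=3)
print(regression_due(last, mid_cycle))                    # schedule alone
print(regression_due(last, mid_cycle, new_release=True))  # release forces a run
```

Passing `interval=timedelta(weeks=1)` gives the weekly variant of the same check.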


3.3 Purge Often

Don’t fall in love with your scripts. Applications change, so treat your scripts as disposable: if there have been major changes in the application, it might be easier to start your script again from scratch. You may also want to create multiple versions of a given script to test the application in different release states.

3.4 Revise Coverage

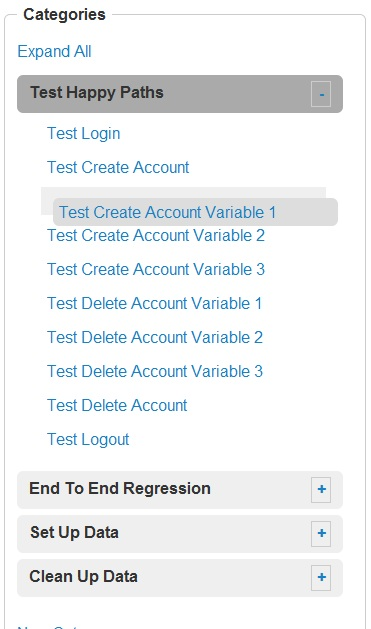

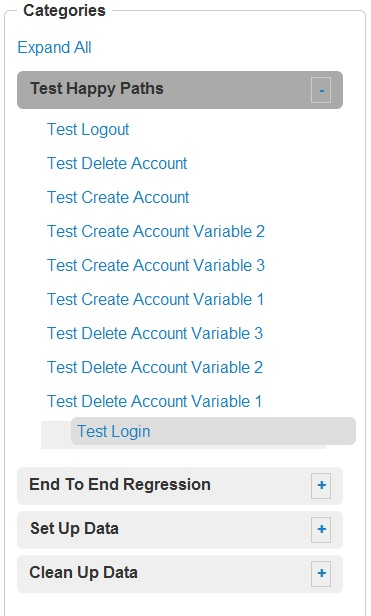

We typically recommend that our clients organize their test cases into test categories (more on this best practice in the build section). We recommend keeping a category for core functionality (the happy path) that tests the mission-critical areas of the application, for faster development cycles where a full end-to-end regression run cannot be completed. Be sure to keep this category up to date by adding or removing mission-critical test cases as the application changes. You can easily reorganize your test cases from within Awetest’s Test Cases page. Test cases and categories have drag-and-drop reorganization functionality that lets you reorder test cases within a test category, move test cases between categories, and rearrange test categories as you’d like.
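As a rough sketch of the category idea, the Python below keeps test cases grouped by category and picks either the core set or the full suite depending on how much time a cycle allows. The category names, test names, and the `select_tests` and `move_test` helpers are all made up for illustration; in Awetest this organization is done through the Test Cases page rather than in code.

```python
# Hypothetical categories; the order inside each list is the run order.
categories = {
    "core": ["login", "add_account"],
    "accounts": ["edit_account", "delete_account"],
    "reports": ["export_report"],
}


def select_tests(categories, full_run):
    """Return every test for a full run, or only the core category otherwise."""
    if full_run:
        return [test for tests in categories.values() for test in tests]
    return list(categories.get("core", []))


def move_test(categories, test, source, target):
    """Move a test case between categories, e.g. when it becomes mission critical."""
    categories[source].remove(test)
    categories.setdefault(target, []).append(test)


# Promote a test into the core category, then list the short-cycle run.
move_test(categories, "delete_account", "accounts", "core")
print(select_tests(categories, full_run=False))
```

Keeping the core category small is what makes the short run useful, so promotions like the one above should usually be balanced by moving less critical cases out.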

3.5 Add Traceability

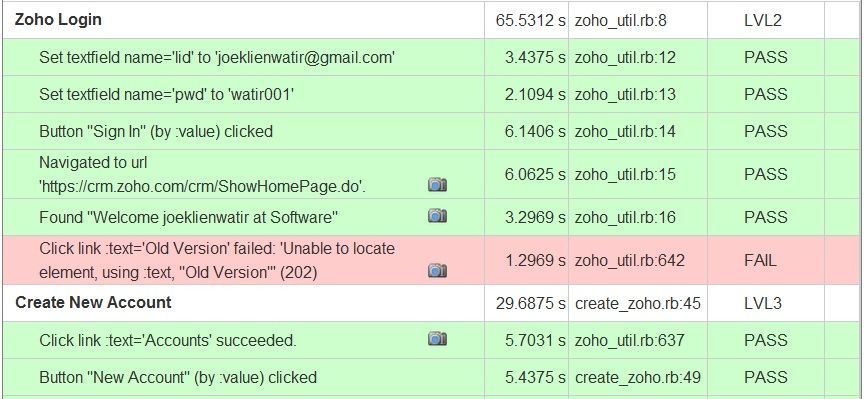

Typically, supporting product and test documentation is maintained in the form of use cases, stories, test cases, etc. These supporting docs are usually stored in ALM systems like Quality Center and Rational or in portal apps like SharePoint, and many large organizations still use MS Excel to maintain and track these specifications. In our experience, these documents tend to grow in both number and size over the course of the SDLC. Awetestlib has a simple method called “MarkTestLevel” that allows you to insert relevant traceability and descriptive information for discrete sections of your script.

For example, if the script covers multiple test cases (e.g. Login, Add Account, Edit Account, Delete Account), each of these test cases should have a unique ID, which can simply be inserted into the MarkTestLevel command.
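The sketch below shows the general pattern in Python: each section of a script is tagged with a test case ID so that the run log can be traced back to the supporting documentation. The `mark_test_level` and `record_step` functions here are hypothetical helpers written for this example, not the Awetestlib method, and the IDs such as `TC-001` are invented placeholders.

```python
def mark_test_level(log, test_id, description):
    """Hypothetical helper: open a new traceable section in the run log."""
    log.append({"id": test_id, "description": description, "steps": []})


def record_step(log, step):
    """Attach a step to the most recently marked section."""
    log[-1]["steps"].append(step)


def run_account_script():
    """One script covering four test cases, each tagged with its own ID."""
    log = []
    mark_test_level(log, "TC-001", "Login")
    record_step(log, "submit credentials")
    mark_test_level(log, "TC-002", "Add Account")
    record_step(log, "fill account form")
    record_step(log, "save account")
    mark_test_level(log, "TC-003", "Edit Account")
    record_step(log, "change account name")
    mark_test_level(log, "TC-004", "Delete Account")
    record_step(log, "confirm deletion")
    return log


for section in run_account_script():
    print(section["id"], section["description"], len(section["steps"]))
```

With the IDs in the log, a failure in any step can be reported against the matching entry in your ALM system or spreadsheet.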